How AI-Generated Music Is Flooding Streaming Platforms Without Disclosure

AI-generated music is flooding Spotify and Apple Music without disclosure. Discover why 34% of daily uploads are synthetic, how "fake artists" earn millions, and why platforms like Deezer are starting to fight back.

In the music streaming era, platforms like Spotify, Apple Music, Amazon Music, YouTube Music, Deezer, and others have democratized access to music. But alongside the positive impact of streaming lies a rapidly emerging challenge: AI-generated music that is released, monetized, and consumed without any disclosure, transparency, or clear policies.

This trend is reshaping the industry, and few listeners realize how big it’s already become.

AI Music: The New Norm, and the Hidden One

Advances in generative AI have made it possible for anyone to create complete songs (vocals, instrumentation, and even artist personas) with little to no human input. Tools like Suno, Udio, Mubert, Soundful, Beatoven, and MusicGen can produce high-quality audio at massive scale, lowering the bar for content creation.

This isn’t just academic; AI-generated music is already saturating streaming catalogs:

Industry data suggests that approximately 30–34% of daily track uploads to some platforms are fully AI-generated, amounting to tens of thousands of tracks per day.

Deezer estimates around 20,000 fully AI-generated tracks are uploaded daily.

Meanwhile, listeners find it almost impossible to tell the difference: in a survey by Deezer and Ipsos, 97% of participants failed to reliably identify AI music in blind tests.

Despite this ubiquity, major streaming services do not disclose to users whether a track was made by AI. Spotify, for example, has no requirement to label AI-generated music in a user-visible way, even as AI uploads surge and fake artist profiles proliferate.

A Flood Without Labels

Spotify

Spotify has no public policy that requires songs created with generative AI to be disclosed as such to listeners. The platform’s current focus is on penalizing spam, fraud, and impersonation. It has removed “spammy” tracks and deepfakes, more than 75 million songs in the past year, but it still does not mandate an AI disclosure label.

Spotify has publicly stated it “does not promote or penalize tracks created using AI tools.”

Apple Music & Amazon Music

Like Spotify, neither Apple Music nor Amazon Music has an explicit policy requiring AI usage disclosure on tracks uploaded to their catalogs. These platforms rely on distributors and rights metadata but generally do not surface AI status to listeners.

YouTube Music

YouTube (a major source of streaming via YouTube Music) has some policies requiring disclosure for AI or synthetic content created with its own generative tools. When creators use these tools, YouTube can apply labels, and creators are encouraged to self-declare their use.

Deezer

Deezer stands out as one of the few services to actively detect and label AI-generated tracks. It has implemented a system that identifies fully AI-generated music and tags it, and keeps such tracks out of algorithmic recommendations.

Bandcamp

In late 2025, Bandcamp took an even stronger stance, officially banning AI-generated music from its platform while still allowing music that is only minimally assisted by AI (e.g., for cleaning audio rather than composing content).

Fake Artists, Real Streams

The lack of disclosure isn’t just academic; it has real economic and cultural impacts:

Fake Artists with Millions of Streams

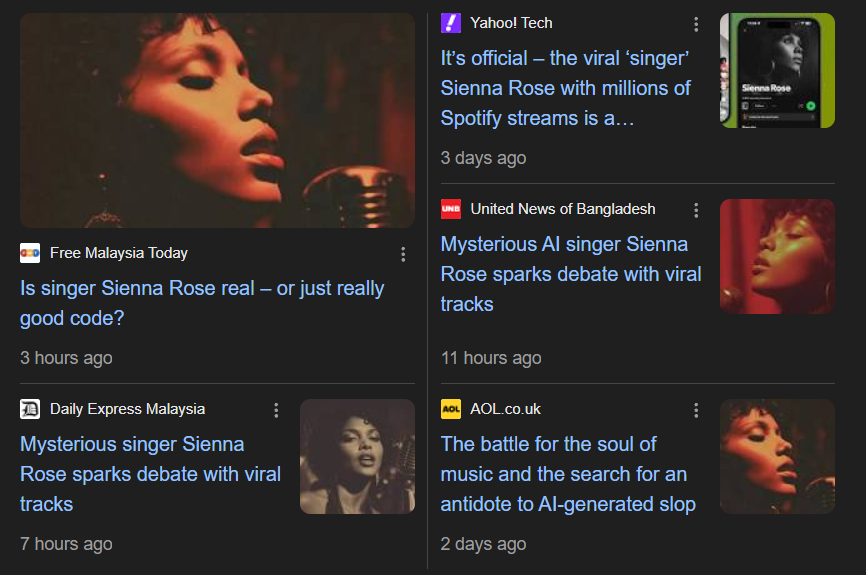

Entire AI-generated “artists” have climbed the streaming ranks without being real musicians. One such AI soul singer, “Sienna Rose,” amassed millions of monthly listeners on Spotify despite being entirely synthetic and fictional.

"Heart on My Sleeve" and Licensing Chaos

In a notable case, an AI-generated song titled “Heart on My Sleeve” racked up hundreds of thousands of streams before being pulled due to copyright concerns about impersonating Drake and The Weeknd.

Chart Bans

Some territories are already pushing back: Sweden recently banned an AI-generated song from its national charts, citing the artist’s lack of genuine humanity.

Fraudulent Streams

Reports show that much of the streaming consumption of AI music is likely fraudulent, artificially inflated by bots to collect royalties, diverting revenue from real artists. In one analysis, up to 70% of AI music streams were flagged as fraudulent.

Fairness, Culture, and Transparency

Competition With Human Artists

When AI tracks flood streaming catalogs without labeling, they compete directly with human creators for attention, playlist placements, and royalties, often with no transparency about their nature. This erodes trust in the streaming ecosystem.

Listener Deception

Most listeners are unaware they’re consuming AI-generated music. Without disclosure, audiences can’t make informed choices about what they value and support.

Economic Distortion

AI tracks generate the same per-stream royalties as human-made music, around $0.003 to $0.005 per stream on Spotify, so undisclosed AI music earns money in a way that is indistinguishable from human artists’ work.
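To put the per-stream figure in perspective, the arithmetic can be sketched in a few lines. This is only a rough illustration: the function name `estimate_royalties` is hypothetical, the rates are the approximate range quoted above, and real payouts vary by market, subscription tier, and distribution deal.

```python
from __future__ import annotations


def estimate_royalties(
    streams: int,
    rate_low: float = 0.003,   # assumed lower bound, USD per stream
    rate_high: float = 0.005,  # assumed upper bound, USD per stream
) -> tuple[float, float]:
    """Return a rough (low, high) payout estimate in USD for a stream count."""
    if streams < 0:
        raise ValueError("streams must be non-negative")
    # Round to cents so the result reads as a currency amount.
    return round(streams * rate_low, 2), round(streams * rate_high, 2)


if __name__ == "__main__":
    low, high = estimate_royalties(1_000_000)
    print(f"1,000,000 streams: ${low:,.2f} to ${high:,.2f}")
```

At these rates, one million streams works out to roughly $3,000 to $5,000, whether the track was made by a person or generated in seconds.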

Mandatory AI Disclosure on Streaming Platforms

To preserve artistic integrity and ensure fair competition and transparency, a policy intervention is needed.

Proposal: AI Music Disclosure System

Streaming platforms could adopt clear labelling requirements for tracks created primarily with AI, inspired by existing metadata flags for explicit content:

AI Label Tag:

A visible badge (e.g., “AI-Generated”) alongside explicit and lyric disclaimers on track listings.

Metadata Standards:

Mandatory metadata fields submitted by distributors detailing AI tools used and human involvement levels.

Algorithm Transparency:

Platforms should clarify how AI tracks are recommended in discovery feeds and whether they are weighted differently.

Licensing & Royalty Reporting:

Distinct accounting for AI-generated music to ensure royalties reflect true creative input.

Such measures would empower listeners, artists, and rights holders, rather than leaving discovery and royalties to hidden algorithms.
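As a minimal sketch of what the label and metadata proposals might look like in practice, the record below carries an AI involvement level plus the tools and human roles a distributor would declare. Everything here is an assumption made for illustration: the names `AIInvolvement`, `TrackDisclosure`, and `badge_for`, the four involvement levels, and the rule for when a badge appears are hypothetical and do not reflect any platform's actual schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class AIInvolvement(Enum):
    """Hypothetical levels of AI involvement declared by a distributor."""
    NONE = "none"
    ASSISTED = "assisted"  # e.g. audio cleanup, not composition
    PRIMARY = "primary"    # AI produced most of the track
    FULL = "full"          # fully AI-generated


@dataclass
class TrackDisclosure:
    """Hypothetical disclosure metadata submitted alongside a track."""
    title: str
    artist: str
    ai_involvement: AIInvolvement
    ai_tools: list[str] = field(default_factory=list)
    human_roles: list[str] = field(default_factory=list)


def badge_for(track: TrackDisclosure) -> Optional[str]:
    """Return the listener-facing badge text, or None if no badge applies."""
    # Assumed rule: only tracks created primarily or fully with AI get a badge.
    if track.ai_involvement in (AIInvolvement.PRIMARY, AIInvolvement.FULL):
        return "AI-Generated"
    return None


if __name__ == "__main__":
    track = TrackDisclosure(
        title="Demo Track",
        artist="Example Artist",
        ai_involvement=AIInvolvement.FULL,
        ai_tools=["ExampleTool"],
    )
    print(badge_for(track))
```

Keeping the involvement level as a small fixed set, rather than free text, is one way to let the same field drive the visible badge, recommendation rules, and royalty reporting consistently.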

Conclusion

The rise of AI in music creation is not inherently bad; it can enable new forms of creative expression. But without disclosure policies, streaming platforms are allowing synthetic tracks to blend in with real artistry. This lack of transparency undermines both listeners and creators and fuels a market where quality and authenticity are invisible.

Platforms like Deezer and Bandcamp show that policies are possible.

Now, major players like Spotify, Apple Music, and Amazon Music should follow suit.

Music lovers deserve to know what they’re really listening to.

And artists deserve fairness.
